Data and Dialogue: Retrieval-Augmented Generation in nodegoat

CORE Admin

We have extended nodegoat so that it can communicate with large language models (LLMs). Conceptually, this allows nodegoat users to prompt their structured data. Technically, it means users can create vector embeddings for their Objects and use these embeddings to run retrieval-augmented generation (RAG) processes in nodegoat.

This development connects three of nodegoat’s main functionalities into a dynamic workflow: Linked Data Resources, the new vector store (nodegoat documentation: Object Descriptions, see ‘vector’), and Filtering. The steps to take are as follows:

Vector Embedding

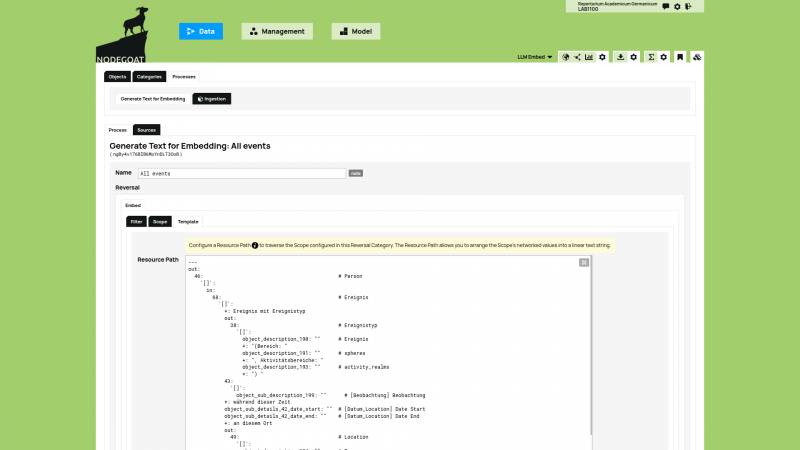

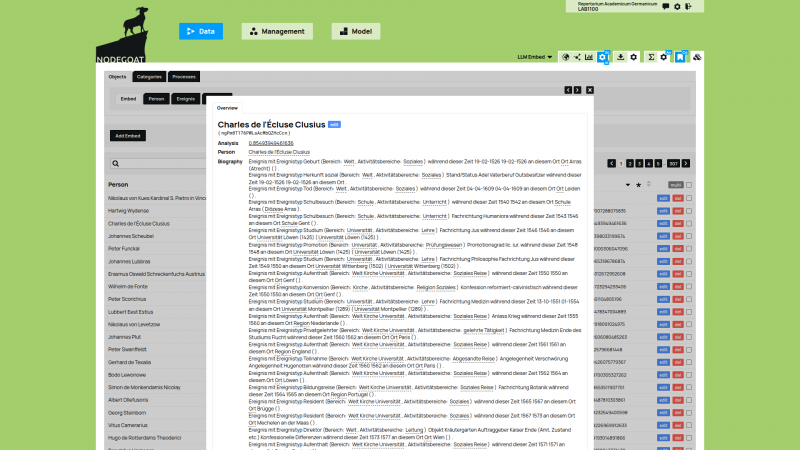

The first step is to use one or more Reversed Collection templates to determine the textual content for each Object. This step transforms a dataset stored as structured data into a textual representation that serves as the input for generating a vector embedding. Because the templates define what is included, the user can select only those elements that are relevant for the process.
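To make the idea of a textual representation concrete, here is a minimal Python sketch of turning one structured record into a sentence-like string. It is not nodegoat's Reversed Collection template syntax: the function `render_object_text`, the `fields` list, and the example record are all hypothetical and only illustrate the principle of selecting relevant elements.

```python
from typing import Mapping, Sequence, Tuple


def render_object_text(
    obj: Mapping[str, object],
    fields: Sequence[Tuple[str, str]],
) -> str:
    """Build a plain-text description from selected fields of a record.

    `fields` lists (key, label) pairs. Keys that are missing or empty in
    the record are skipped, and keys not listed are left out entirely.
    """
    parts = []
    for key, label in fields:
        value = obj.get(key)
        if value is None or value == "":
            continue
        # Flatten multi-valued fields into a comma-separated string.
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(v) for v in value)
        parts.append(f"{label}: {value}")
    return (". ".join(parts) + ".") if parts else ""


# Hypothetical record; the field names are assumptions for illustration.
letter = {
    "sender": "Ada Example",
    "recipient": "Ben Sample",
    "date": "1650-03-12",
    "keywords": ["trade", "shipping"],
    "internal_id": 4821,  # not selected below, so it never reaches the text
}
selected = [
    ("sender", "Sender"),
    ("recipient", "Recipient"),
    ("date", "Date"),
    ("keywords", "Keywords"),
]
text = render_object_text(letter, selected)
print(text)
```

Leaving `internal_id` out of the selection mirrors the point made above: only elements that carry meaning for the embedding should end up in the text.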


Next, the textual representation of each Object is sent to an LLM to create an embedding for that Object. Communication between nodegoat and the LLM is handled through Linked Data Resources and Ingestion Processes.[....]
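In nodegoat this step is configured through Linked Data Resources and Ingestion Processes rather than written as code. Purely to illustrate the data flow (one text in, one vector out, stored per Object), a minimal Python sketch could look as follows. The names `embed_objects` and `toy_embed` are hypothetical, and `toy_embed` is a deterministic stand-in, not a real embedding model; in practice the callable would wrap a request to whichever model endpoint is in use.

```python
import hashlib
import math
from typing import Callable, Dict, List

Vector = List[float]


def toy_embed(text: str, dims: int = 8) -> Vector:
    """Stand-in for a real embedding model (assumption, for illustration).

    Hashes each token into one of `dims` buckets and L2-normalises the
    resulting counts, so the output is deterministic and needs no network.
    """
    vec = [0.0] * dims
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vec[digest[0] % dims] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def embed_objects(
    texts: Dict[int, str],
    embed: Callable[[str], Vector],
) -> Dict[int, Vector]:
    """Create one embedding per Object ID, skipping Objects with empty text."""
    return {
        object_id: embed(text)
        for object_id, text in texts.items()
        if text.strip()
    }


# Hypothetical Object IDs mapped to their textual representations.
texts = {
    1: "Sender: Ada Example. Recipient: Ben Sample.",
    2: "",
    3: "Sender: Ben Sample.",
}
vector_store = embed_objects(texts, toy_embed)
print(sorted(vector_store))
```

Passing the embedding function in as a parameter keeps the sketch independent of any particular model or provider.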